5 interesting charts on AI

Trends across semiconductors, AI application pricing, and electricity demand

For this post, I’m trying something different. I recently hosted an AI “Charts and Tarts”[1] event where people brought an interesting chart about AI and we served some tarts. Below are some of those charts with my key takeaways. Reach out if you want to be included in the next one!

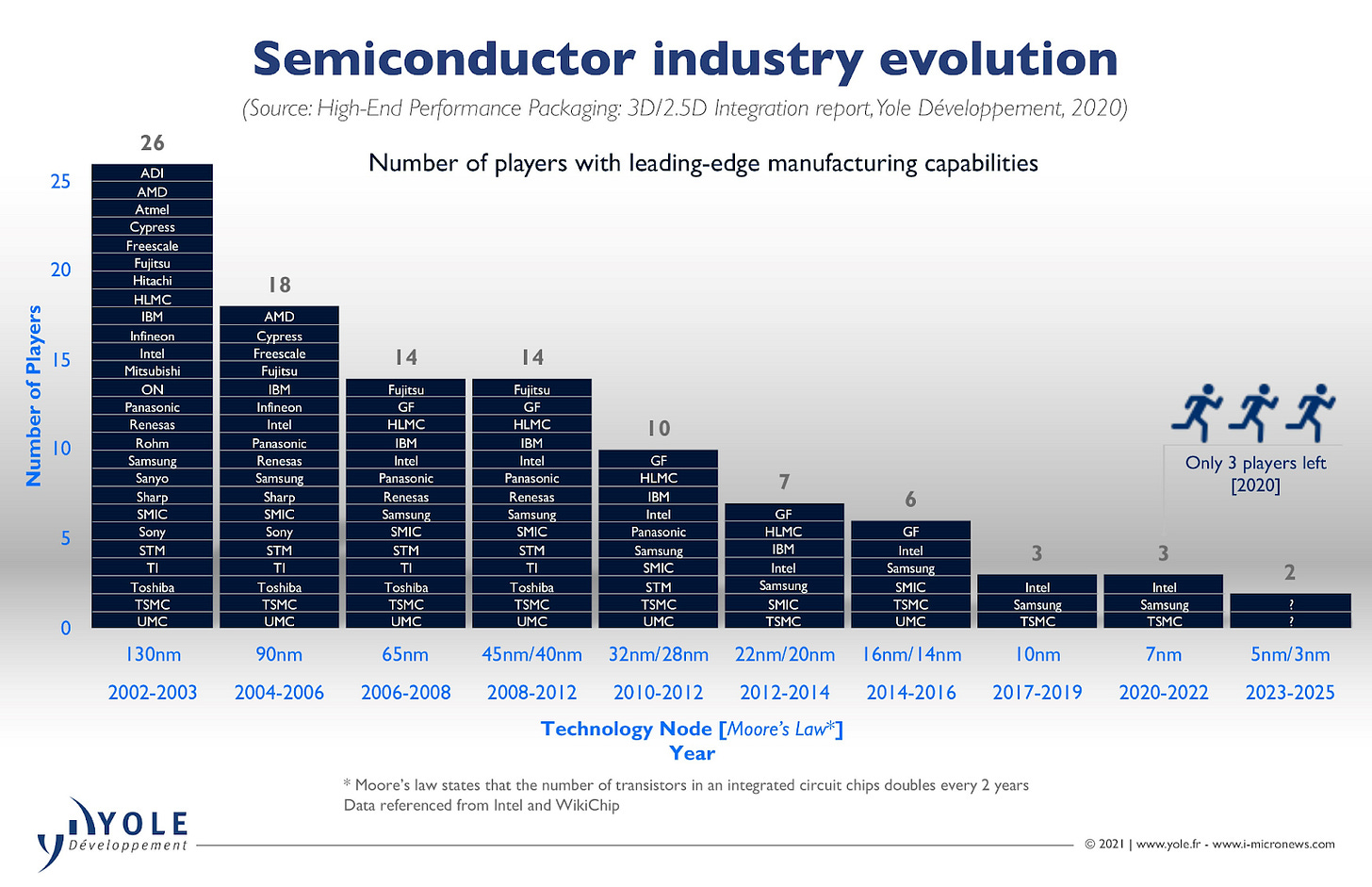

1) The semiconductor industry is consolidating

The semiconductor industry has been consolidating. As of 2022, only three companies (TSMC, Samsung, Intel) had leading-edge manufacturing capabilities, the kind needed to produce the most advanced chips used for AI today. That’s down from six in 2016 and 26 in 2003.

In terms of market share, the picture is even starker: TSMC holds a near-monopoly, with 94% of the market. A key reason is the increasing complexity of semiconductor production. Everyone knows Moore’s Law, but what about Moore’s Second Law (also known as Rock’s Law)? It has also held up, and it says “the cost of a semiconductor chip fabrication plant doubles every four years,” which raises the barrier to entry for any company trying to compete with TSMC.
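To see how fast that rule compounds, here is a back-of-the-envelope sketch in Python. It simply applies the four-year doubling quoted above; the function names are hypothetical and no real fab cost data is used.

```python
# Back-of-the-envelope: Moore's Second Law says fab cost doubles every ~4 years.
# Pure compounding arithmetic; no real fab cost data is involved.
DOUBLING_YEARS = 4.0


def fab_cost_multiplier(years: float, doubling_years: float = DOUBLING_YEARS) -> float:
    """Factor by which fab cost grows over `years` if it doubles every `doubling_years`."""
    return 2.0 ** (years / doubling_years)


def implied_annual_growth(doubling_years: float = DOUBLING_YEARS) -> float:
    """Constant annual growth rate consistent with the given doubling period."""
    return 2.0 ** (1.0 / doubling_years) - 1.0


if __name__ == "__main__":
    print(f"Implied annual cost growth: {implied_annual_growth():.1%}")
    for span in (4, 8, 20):
        print(f"{span:>2} years -> {fab_cost_multiplier(span):.0f}x")
```

Run as a script, it reports an implied cost growth of roughly 19% per year, which compounds to about 32x over two decades.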

Monopolies or near-monopolies are emerging in other parts of the semiconductor supply chain too.

The Electronic Design Automation (EDA) software market, which supplies the tools needed to design these increasingly complex semiconductors, has consolidated heavily, with Cadence and Synopsys heading toward a duopoly.

The Dutch company ASML has a monopoly on the Extreme Ultraviolet Lithography market (it’s the world’s only supplier!).

And then there’s Nvidia, obviously, with its near-monopoly on the GPUs used to train AI models.

There are obvious investment implications from this type of consolidation, as the stock prices for all these companies have risen significantly in recent years. But the other thing our group debated was whether this is sustainable: Can we really risk having this technological revolution be bottlenecked by individual companies along the supply chain?

2) Our electricity use has stagnated

Everyone knows that AI scaling will require data centers to consume a significant amount of electricity. Goldman Sachs expects data centers to make up 8% of total power demand in 2030 (up from 3% today) due to AI, but plenty of AI optimists think this severely underestimates the need.

What’s less appreciated is that the amount of electricity we produce (and use) has stagnated for decades. This stagnation breaks the “Henry Adams Curve,” the long-term trend of about 7% annual growth in per-capita energy use since 1800 (a term coined in this great book “Where Is My Flying Car?”).

Why has this happened? We’ve certainly become more “efficient,” but, as the book notes, we also radically shifted our mentality about energy consumption and innovation during the 1960s and ’70s. Gone were the 1950s visions of innovation, particularly nuclear energy, making power “too cheap to meter.” Instead, we got the environmental movement, the oil price shock, and anti-nuclear-weapons activists adding nuclear energy to their grievances[2]. I don’t see a way to fulfill the optimistic projections for AI without reversing this mentality.
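The size of the gap is easier to feel with the arithmetic. A minimal sketch, assuming a constant 7% annual growth rate as the Henry Adams Curve describes; the helper names are hypothetical and the outputs are pure compounding, not measured energy data.

```python
import math

# Assumption: a constant ~7% annual growth rate in per-capita energy use (Henry Adams Curve).
TREND_RATE = 0.07


def doubling_time(rate: float) -> float:
    """Years for a quantity growing at `rate` per year to double."""
    return math.log(2) / math.log1p(rate)


def trend_multiple(rate: float, years: int) -> float:
    """How many times larger an on-trend value would be than a flat one after `years`."""
    return (1 + rate) ** years


if __name__ == "__main__":
    print(f"Doubling time at {TREND_RATE:.0%}: {doubling_time(TREND_RATE):.1f} years")
    for years in (10, 30, 50):
        print(f"{years} flat years -> trend would be {trend_multiple(TREND_RATE, years):.1f}x higher")
```

At 7% a year, usage doubles roughly every 10 years, so three decades of flat usage would leave per-capita consumption about 7.6x below where the trend line would have put it.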

3) AI application pricing remains stuck in SaaS land

Source: How AI apps make money (Kyle Poyar and Palle Broe)

Despite a lot of discussion about the death of SaaS and practices like seat-based pricing, AI applications today are priced… pretty similarly to SaaS! Most are still subscription-based (not usage-based), charge by the number of users, offer free plans, and use a similar tiered pricing model.

That said, our “Charts and Tarts” group predicted this will change; it’s only a matter of time. One emerging example is customer support companies (Zendesk, Intercom) testing pricing models that charge for each ticket their AI resolves.
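To make the difference between the two billing models concrete, here is a toy comparison in Python. The prices and team size are hypothetical placeholders, not Zendesk’s or Intercom’s actual rates, and the class names are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class SeatPlan:
    """Classic SaaS pricing: pay per human user, regardless of what the AI does."""
    price_per_seat: float  # hypothetical monthly price per seat

    def monthly_bill(self, seats: int, tickets_resolved: int) -> float:
        return self.price_per_seat * seats


@dataclass
class PerResolutionPlan:
    """Outcome-based pricing: pay only for tickets the AI resolves."""
    price_per_resolution: float  # hypothetical price per resolved ticket

    def monthly_bill(self, seats: int, tickets_resolved: int) -> float:
        return self.price_per_resolution * tickets_resolved


if __name__ == "__main__":
    seats, resolved = 10, 2_000  # hypothetical support team and AI workload
    for plan in (SeatPlan(price_per_seat=50.0), PerResolutionPlan(price_per_resolution=1.0)):
        print(type(plan).__name__, plan.monthly_bill(seats, resolved))
```

In this toy model, the seat-based bill depends only on headcount, while the per-resolution bill tracks how much work the AI actually does.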

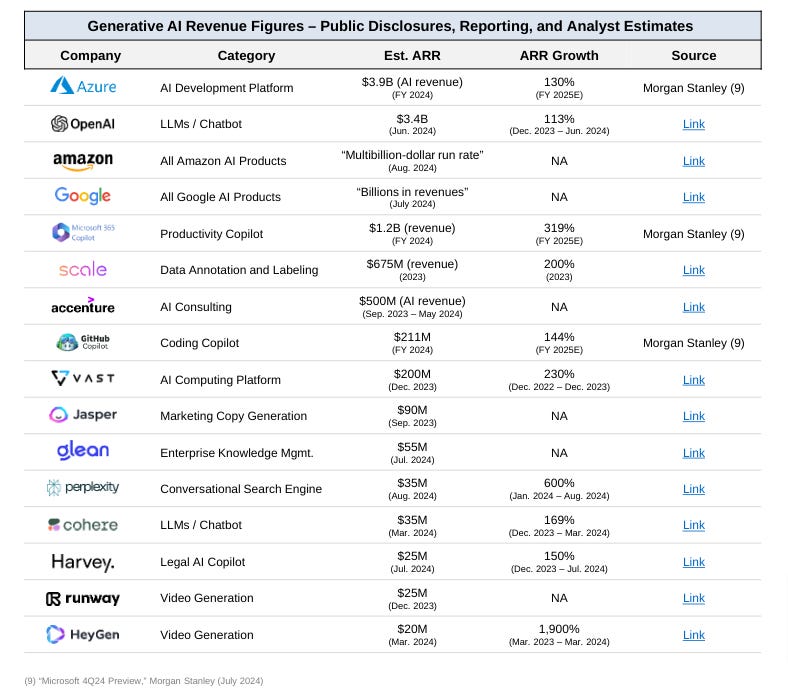

4) GenAI revenue is still missing from the application layer

Outside of the semiconductor industry (covered earlier in this piece), the chart above shows that very few companies are making substantial revenue from AI today. And many of those that are build or run the AI models (cloud, data annotation) rather than sell application products to end users. As a result, unlike in Cloud/SaaS, the bulk of the profits in AI so far are going to semiconductor and infrastructure companies.

This isn’t news to most, but it’s interesting to see all the companies ranked top to bottom. The group was most bullish on enterprise application startups joining the list in the next few years, particularly in use cases that are big in SaaS today but missing here (ex: cybersecurity).

5) Can models be more efficient when they don’t have to memorize?

Source: Improving language models by retrieving from trillions of tokens

My friend (and attendee) Rob has been obsessed with this 2021 paper for years. This one’s a bit more technical, but bear with me.

DeepMind developed a language model with native external retrieval (i.e., available at both training and inference time; standard RAG, by contrast, involves retrieval only during inference after a model has been trained). The way to think about this chart is that the y axis shows model performance (smaller number = better) and the x axis shows model size (more parameters = bigger model). The chart shows that smaller models which learn to retrieve documents from an external database (“Retro=On”) perform similarly to order-of-magnitude larger models which don’t, since retrieval avoids having to memorize obscure things and enables reallocating compute/memory.
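The core idea of retrieval, looking up relevant text instead of memorizing it, can be sketched in a few lines of Python. This is a toy, not RETRO: it assumes a bag-of-words “embedding” and brute-force cosine similarity, whereas the paper uses neural embeddings, approximate nearest-neighbor search over a vastly larger database, and feeds the retrieved neighbors into the model through cross-attention. The database contents and function names are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector (stand-in for a neural encoder)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, database: list[str], k: int = 2) -> list[str]:
    """Return the k database chunks most similar to the query (brute-force search)."""
    q = embed(query)
    ranked = sorted(database, key=lambda chunk: cosine(q, embed(chunk)), reverse=True)
    return ranked[:k]


# Hypothetical external database: facts live here, not in the model's parameters.
DATABASE = [
    "asml is the only supplier of euv lithography machines",
    "tsmc manufactures most leading edge chips",
    "tarts were served at the charts and tarts event",
]

if __name__ == "__main__":
    print(retrieve("who supplies euv lithography machines", DATABASE, k=1))
```

This captures only the intuition behind the chart: when obscure facts can be looked up in the database, the model does not have to store them in its parameters.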

We discussed whether the future is likely to bring smaller models that are better at tool use (ex: the direction Apple is taking with its recent Apple Intelligence announcements). After all, this is not too dissimilar from how our brains work: we are much worse than AI models at memorizing information but highly effective at finding it when we need it.

Thanks to Kasra for his helpful feedback.

[1] I’ve found rhyming titles are as important as the event content itself in getting people to come.

[2] From the book: “It was the anti-nuclear weapons movement that morphed into the anti-nuclear-power-movement. Activists pushed the notion, and many probably believed it themselves, that power plants were giant nuclear bombs waiting to explode at a moment’s notice and drench the world in fallout. As we have seen, the fact that this was completely untrue at the time, and has since become even less statistically plausible as greater safety protocols have been introduced, didn’t seem to faze the activists in the slightest or impede the growth or influence of their movement.”